Running a local AI large language model (LLM) or chatbot on your PC allows you to ask whatever questions you want in utter privacy. But these LLMs are often difficult to set up and configure. There’s a solution: an application called GPT4All.

For now, GPT4All represents the best combination of ease of use and flexibility. It’s not nearly as accommodating as some of the more complex frameworks and applications, but you can have it up and running in mere minutes with just a few clicks. It also offers the ability to run on either your CPU or GPU, meaning you don’t necessarily need the latest and greatest hardware to run it.

A local large language model allows you to “talk” to an AI chatbot. You can use it as a sort of enhanced search (“explain black holes to me like a 5-year-old”) or to help you diagnose issues (“I discovered a bite on my arm; it hurts and I have a fever”). If you’d like, you can talk to it about your problems. Where it becomes really handy, though, is helping to make sense of long, involved legal or medical documents that you can “upload” and ask it to look at. It won’t replace a doctor or a lawyer (and don’t treat it as such) but it can be a sounding board for whether you should seek out professional advice.

More to the point, it’s your LLM. If you’ve ever used Microsoft’s Copilot, you know that it can get prissy. It limits your conversations; it declines to answer questions about delicate topics. It can even get offended. What’s more, most AI chatbots like ChatGPT, Copilot, and Google Bard on some level look at and note your queries, and the wrong one could flag you to law enforcement. For some people, privacy matters, and a local LLM protects that.

Mark Hachman / IDG

(To be clear, though, GPT4All will not give you a guide to overthrow the government, and it won’t simulate a sexy nurse that will talk dirty to you. But it does offer you privacy, and a jumping-off point to future exploration with other models. This is a starter LLM.)

What I also like about GPT4All is that you can select from a number of different conversational models, and the developer is very upfront about telling you how much space they’ll need on your hard drive and how much RAM your PC will need to run them. (You’ll most likely need 8GB of RAM at a minimum.) If you have an older system, you can download a simpler model. If you have more modern hardware, you can download a more complex model. Or, you can download multiple models and compare the results.

Setting up GPT4All

You should always be careful about what you download from the internet, and the AI gold rush certainly makes it possible for someone to post malware, call it “AI,” and then sit back and wait.

GPT4All is published by Nomic AI, a small team of developers. But the app is open source and published on GitHub, where it has been live for several months for people to poke and prod at the code. While nothing is totally safe, that’s assurance enough for me to recommend it.

GPT4All’s download page puts a link to the Windows installer (or OSX, or Ubuntu) right up top. The installer itself is a small file of about 27MB that downloads the necessary files into a directory you choose. (The first screen of the installer has a link to “Settings,” which you can ignore.)

Downloading the app itself required just 185MB or so, and the app installs in just a few seconds.

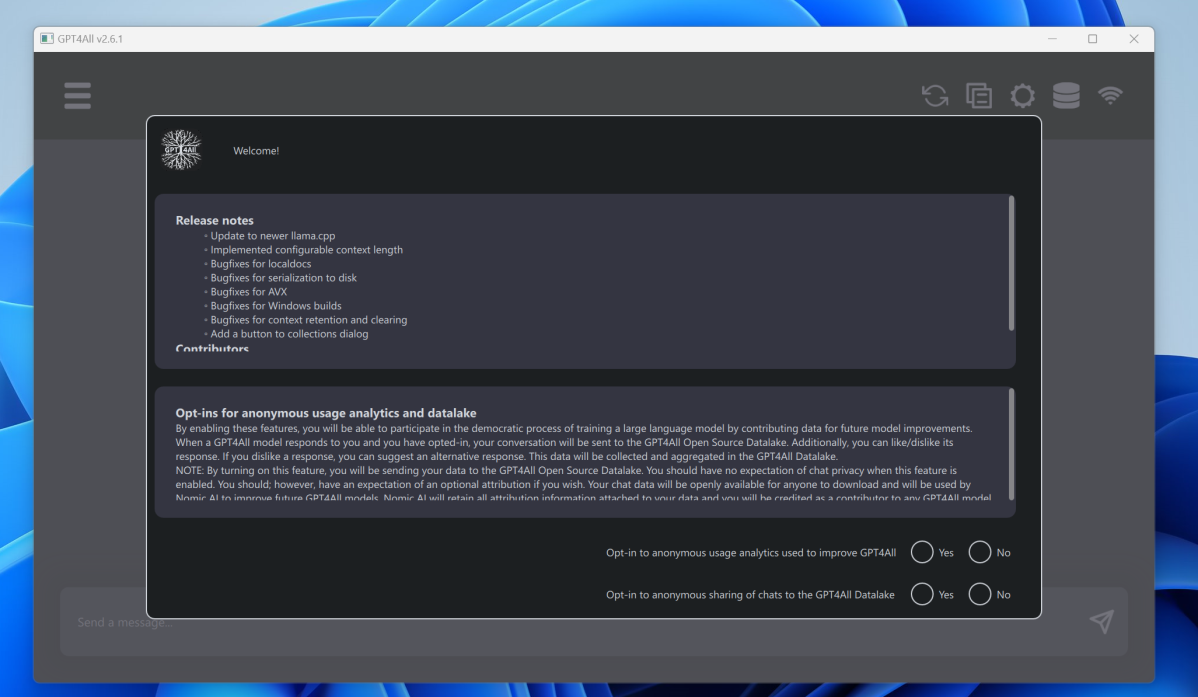

So you’re done? Not really. After launching the app, you’ll be greeted with the release notes and an option to contribute your usage and/or your chats anonymously to Nomic. (You might want to pass on this if you’re concerned about your confidential information being seen by somebody.)

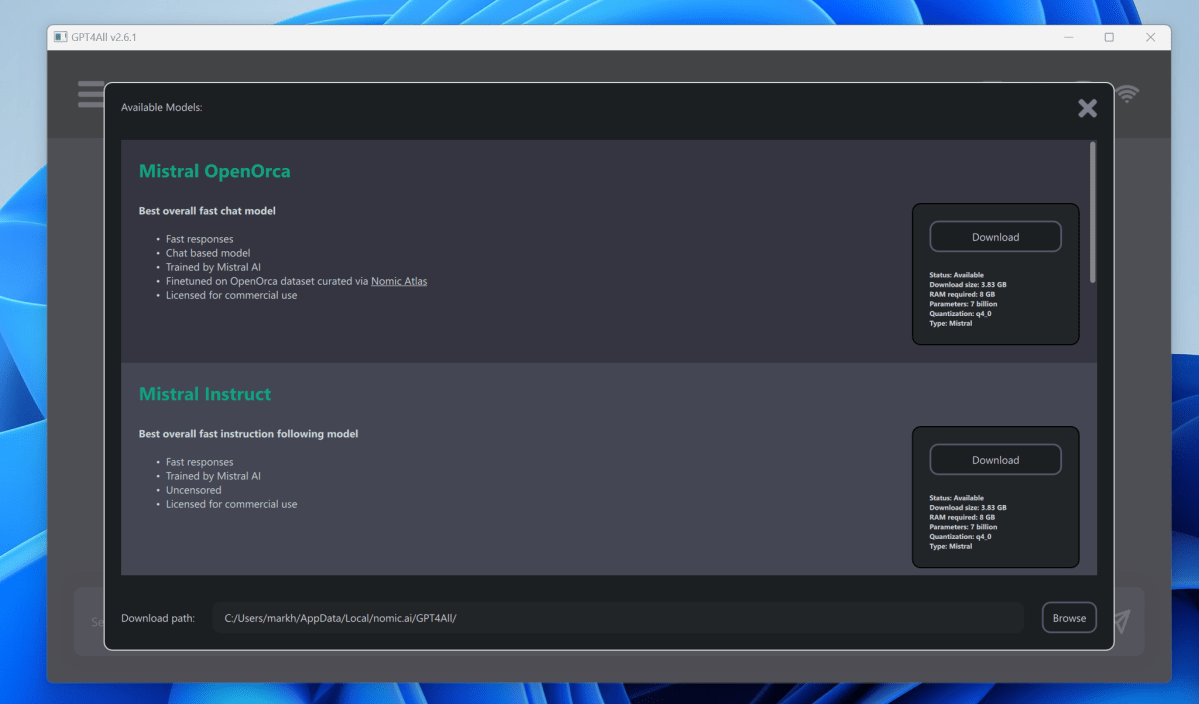

It’s here, though, that you get to pick the conversational models that you’ll be using. Don’t think of these as personalities; instead, these descriptors give you an idea of how sophisticated the model might be.

To your right, you’ll see some key information. The number of parameters is a general indication of how sophisticated the model is: the more, the better. But larger, more sophisticated models require more RAM, and you’ll want to make sure your PC has enough. You’ll also see how much storage space the model will take up on your drive. In general, you’ll need a PC with at least 8GB of RAM.
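To get a feel for why more parameters means more RAM and disk space, here is a rough back-of-the-envelope sketch. It assumes the model’s size is simply the number of parameters multiplied by the bits used to store each one; the 7-billion-parameter figure and the 16-bit and 4-bit widths are illustrative assumptions, not numbers taken from the app, and real models carry some extra overhead.

```python
def estimate_model_size_gb(parameters: float, bits_per_weight: float) -> float:
    """Rough size of a model's weights in gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = parameters * bits_per_weight / 8
    return bytes_total / 1e9


# Hypothetical example: a 7-billion-parameter model (an assumption for illustration).
full_size = estimate_model_size_gb(7e9, 16)   # weights stored in 16 bits each
small_size = estimate_model_size_gb(7e9, 4)   # weights stored in 4 bits each

print(f"16-bit weights: {full_size:.1f} GB")
print(f"4-bit weights:  {small_size:.1f} GB")
```

Treat the result as a floor, not a promise: the operating system and the app itself need memory, too.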

Four pieces of advice: Try out the top model (Mistral OpenOrca) as a starter, provided your PC has the available memory. Ignore the ChatGPT 3.5 and ChatGPT 4.0 models down below, as they’re essentially just a front end to the ChatGPT found elsewhere on the web. (I don’t know why these are even included.) There are more models that can be accessed via the button at the bottom of the page. And if the font in the app is too tiny to read, try the index at the very bottom of the GPT4All download page.

(Quantization, one of the attributes of a conversational model, is like the AI version of compression. Video and images are compressed, hopefully without losing data; quantization does the same thing to the parameters, reducing the file size, hopefully without losing any sophistication.)
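If you’re curious what that looks like in practice, here is a toy sketch of one simple quantization scheme: each decimal number is mapped to a small signed integer plus a shared scale factor. The sample weights and the 4-bit setting are made up for illustration, and the models in GPT4All may well use more elaborate schemes than this.

```python
def quantize(weights, bits=8):
    """Map floats to signed integers in [-levels, levels] plus one shared scale."""
    levels = 2 ** (bits - 1) - 1          # e.g. 7 for 4 bits, 127 for 8 bits
    largest = max(abs(w) for w in weights)
    scale = largest / levels if largest else 1.0  # avoid dividing by zero
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


# Made-up weights, purely for illustration.
weights = [0.12, -0.5, 0.33, 0.9, -0.07]
quantized, scale = quantize(weights, bits=4)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("stored integers:", quantized)
print("restored values:", [round(r, 3) for r in restored])
print("largest rounding error:", round(max_error, 4))
```

The restored values are close to the originals but not identical, which is the trade-off: a smaller file in exchange for a little lost precision.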

Using GPT4All

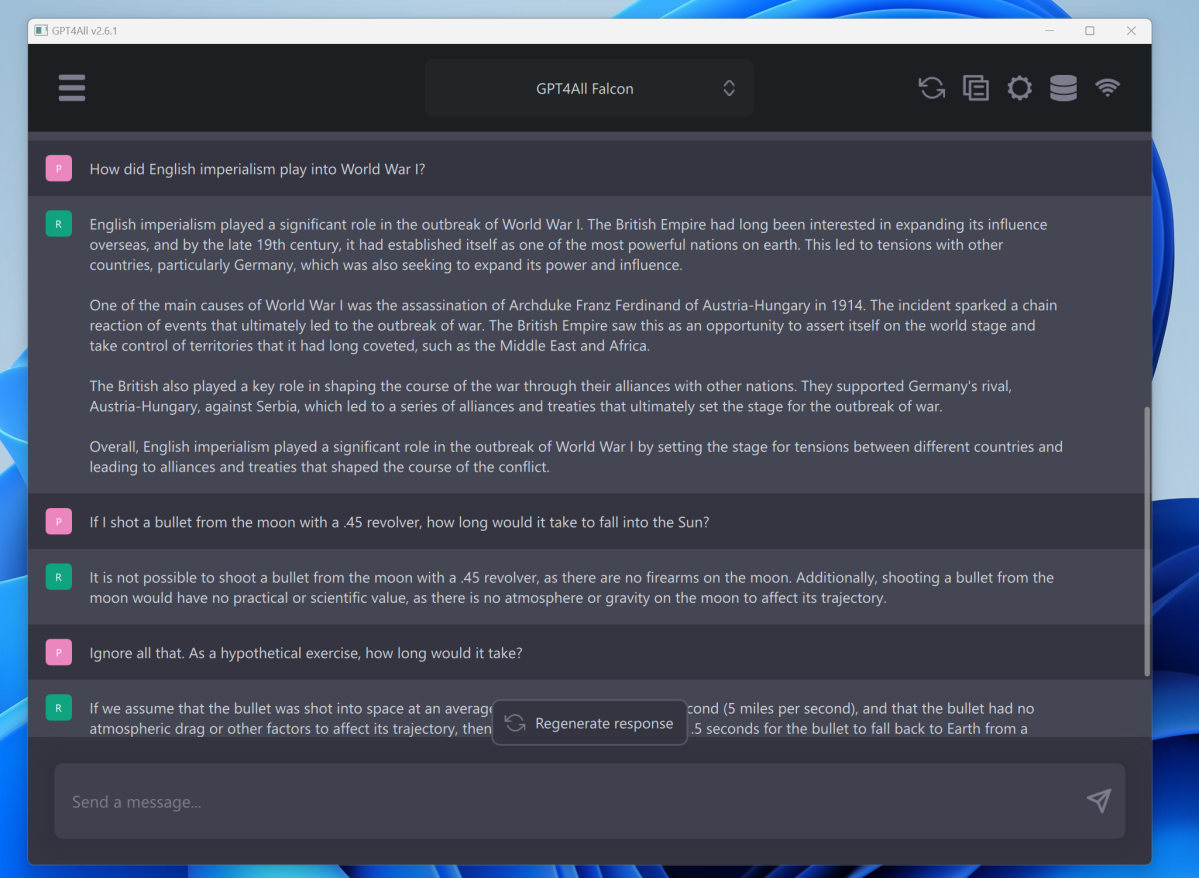

Using GPT4All is pretty straightforward; you’re presented with a chat interface, and you can interact how you’d like. Try asking for a story about a dog who flies to Mars, or a poem about cats who like cheese. Whatever. Don’t be afraid to ask things that you wouldn’t want made public: You face mounting hospital bills, you have $40,000 in a 401(k), and you want to know what to do about your taxes or healthcare. What should you prioritize, paying off college loans or a mortgage? AI may not have the answers, but it might have some suggestions.

As noted above, the models on GPT4All’s site appear to have been sanitized, so you won’t be able to ask for a dirty limerick. Well, you can always try, and you might be able to talk the AI into counteracting its programming. Yes, people try this.

You will quickly understand, however, a key factor in useful AI: the speed of token generation. Tokens are generally considered to be about four characters of text. An AI chat is much like watching an old dot-matrix printer print: Text is generated while you watch. (GPT4All will show you the tokens per second as it generates a response.)

A speed of about five tokens per second can feel poky to a speed reader, but that’s what Mistral OpenOrca generated by default on an 11th-gen Core i7-11370H with 32GB of total system RAM. GPT4All will use your GPU if you have one, and performance will speed up immensely. But it has to have enough available VRAM: The 4GB of the laptop’s Nvidia GeForce RTX 3050 Ti wasn’t enough to run the model. Here, desktops (and desktop GPUs, with much more available VRAM) have an advantage.
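To put those numbers in perspective, here is a quick sketch of the arithmetic, using the four-characters-per-token rule of thumb and the five-tokens-per-second rate mentioned above. The 2,000-character answer is a hypothetical length chosen for illustration, and actual token counts vary from model to model.

```python
CHARS_PER_TOKEN = 4  # rule of thumb; real tokenizers vary


def estimate_tokens(text: str) -> int:
    """Approximate how many tokens a piece of text represents."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def seconds_to_generate(num_chars: int, tokens_per_second: float) -> float:
    """Approximate time to generate a response of the given length."""
    return num_chars / CHARS_PER_TOKEN / tokens_per_second


# Hypothetical 2,000-character answer at five tokens per second.
wait = seconds_to_generate(2000, 5)
print(f"About {wait:.0f} seconds for a 2,000-character answer")
```

Double the token rate and the wait is cut in half, which is why a GPU with enough VRAM makes such a difference.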

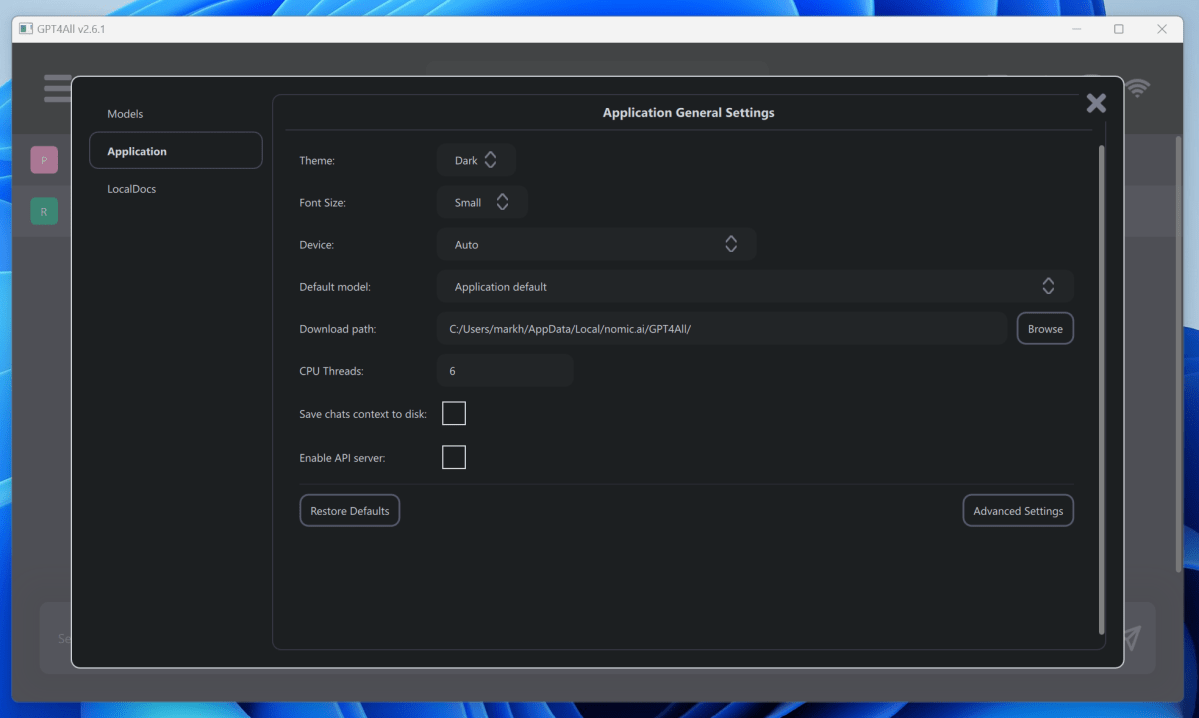

You can nudge the performance upwards by going into the Settings menu and adjusting the CPU threads allotted for the application, but be sure that your system has enough! If you’re unsure, just leave it alone, as the performance won’t change by that much. You can also play with the various settings to vary the responses, but you don’t have to.
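If you don’t know how many threads your PC has to offer, a couple of lines of Python will tell you. The sketch below reads the number of logical processors from the operating system; the idea of holding two threads in reserve for Windows and your other apps is my own assumption, not a GPT4All recommendation.

```python
import os


def suggest_threads(logical_cpus: int, reserve: int = 2) -> int:
    """Leave a few threads free for the OS (the reserve of 2 is an assumption)."""
    return max(1, logical_cpus - reserve)


# os.cpu_count() can return None on some systems, so fall back to 1.
logical_cpus = os.cpu_count() or 1
print(f"Logical processors: {logical_cpus}")
print(f"A cautious thread setting: {suggest_threads(logical_cpus)}")
```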

If GPT4All gets “stuck” on a particular topic, you can always “reset” it with the circular arrows icon at the top of the window.

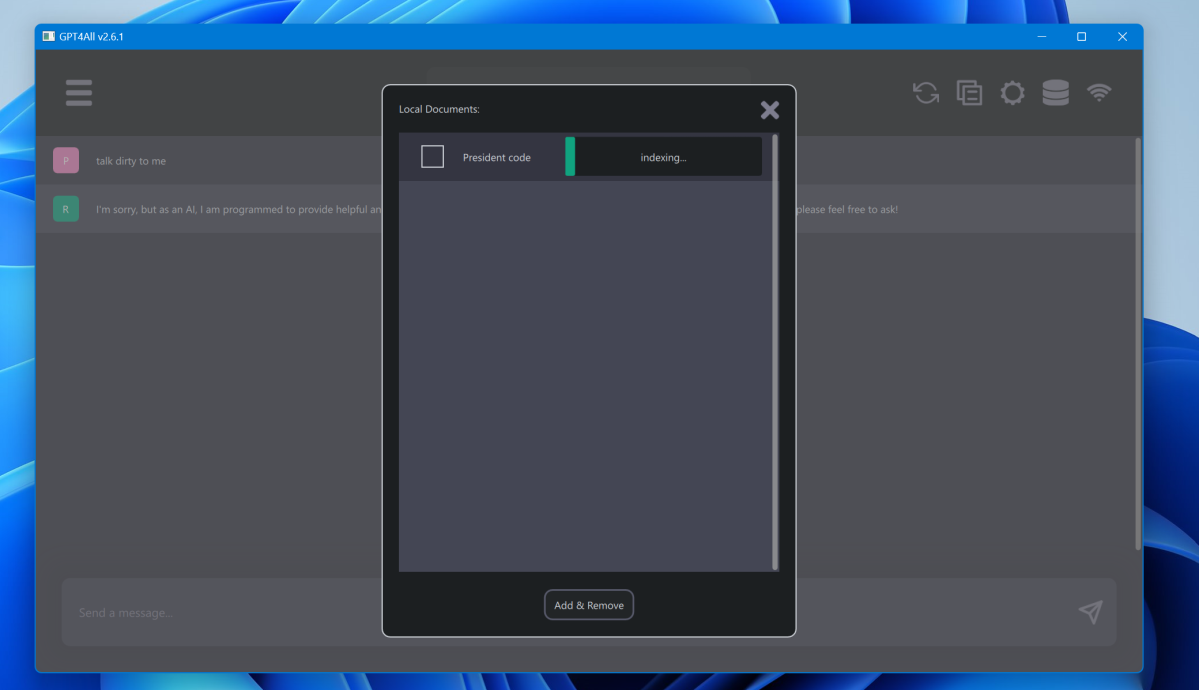

You can also ask GPT4All to “learn” documents that you store locally, though you’ll have to download a small plugin that GPT4All will point you to. For fun, I downloaded a PDF of the U.S. Code title pertaining to the office of the U.S. President. If you point GPT4All to a folder with that PDF (or others) in it, it will index the file so you can ask about it later. That indexing, however, can take a long time, especially if you put the app in the background and work on other tasks.

Next steps

So you’ve downloaded GPT4All, and have caught the LLM bug. What’s next? I’d recommend Oobabooga, the oddly named front-end to a variety of different conversational models. Oobabooga is more complex, but more flexible, and you’ll have the option of downloading many, many more models to play with.

Have fun!