Intel heard your screams of anguish, PC gamers. Budget graphics cards that are actually worth your money have all but disappeared this pandemic/crypto/AI-crazed decade, with modern “budget” GPUs going for $300 or more, while simultaneously being nerfed by substandard memory configurations that limit your gaming to 1080p resolution unless you make some serious visual sacrifices.

No more.

Today, Intel announced the $249 Arc B580 graphics card (launching December 13) and $219 Arc B570 (January 16), built using the company’s next-gen “Battlemage” GPU architecture. The Arc B580 not only comes with enough firepower to best Nvidia’s GeForce RTX 4060 in raw frame rates, but also has a 12GB memory system purpose-built for 1440p gaming – something the 8GB RTX 4060 sorely lacks despite costing more.


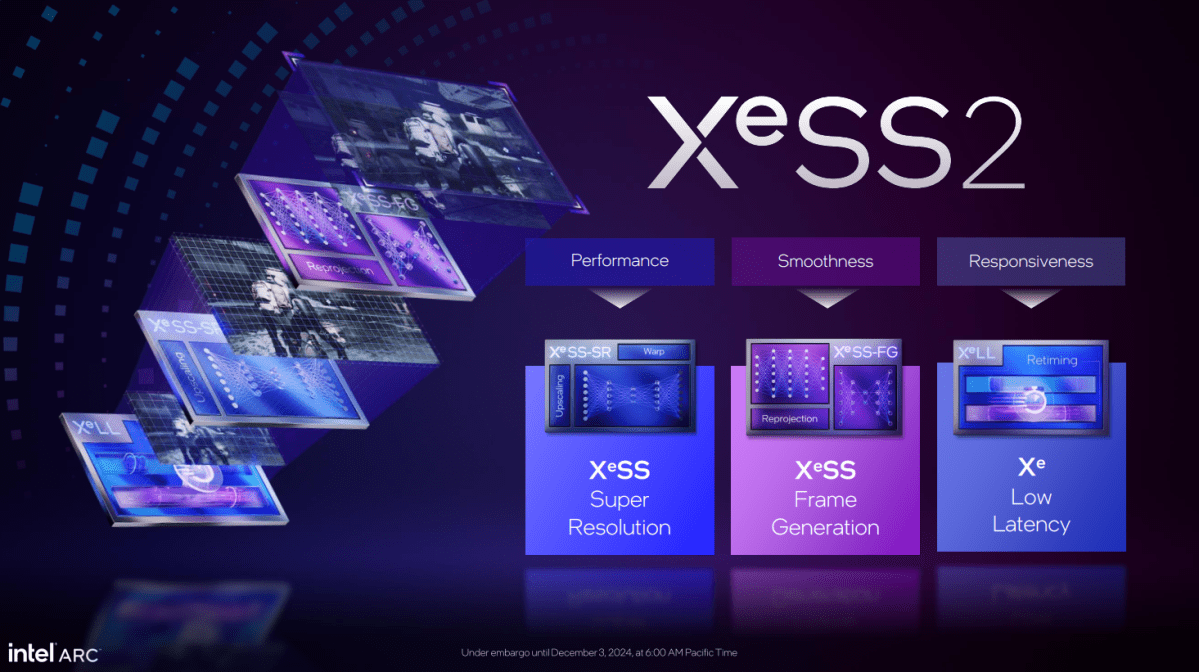

As if that wasn’t an appealing enough combination (did I mention this thing is $249?!), Intel is upping the ante with XeSS 2, a newer version of its AI super-resolution technology that adds Nvidia DLSS 3-like frame generation for even more performance, as well as Xe Low Latency (XeLL), a feature that can greatly reduce latency in supported games.

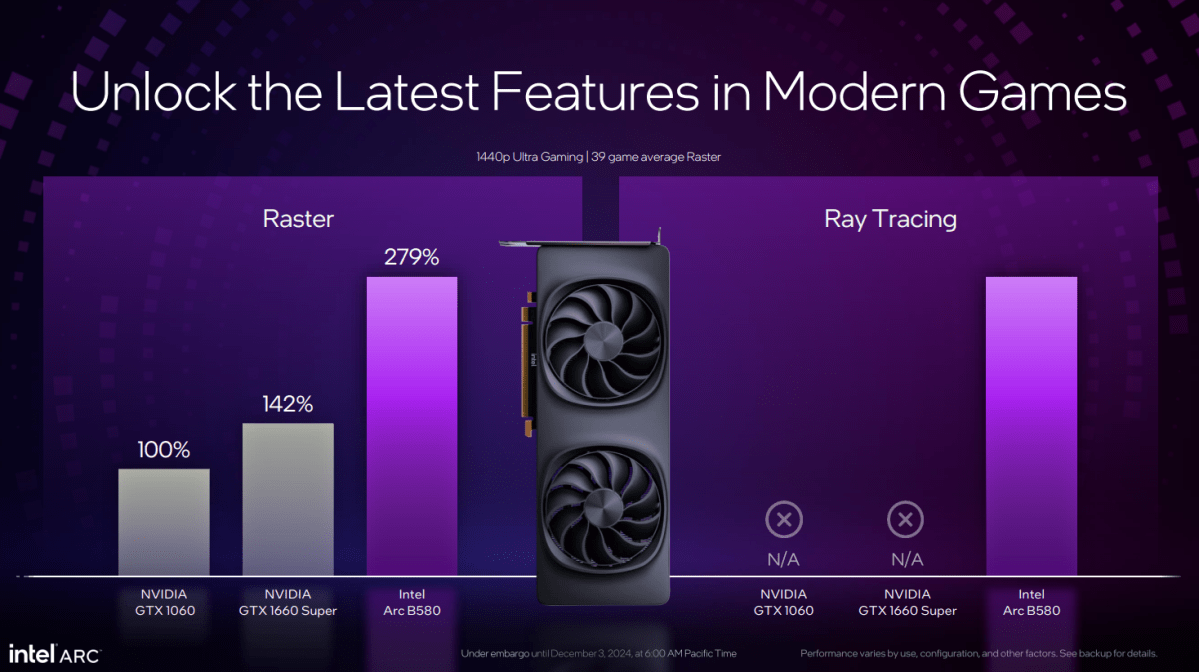

Add it all up and Intel’s Arc B580 seems poised to really, truly shake things up for PC gamers on a budget – something we haven’t seen in years and years. If you’re still rocking an OG GTX 1060, take a serious look at this upgrade. Let’s dig in.

Meet Battlemage and the Arc B580

Intel’s debut “Alchemist” Arc GPUs launched in late 2022, rife with all the bugs and issues you’d expect from the first generation of a product as complex as modern graphics cards. Intel diligently ironed those out over the subsequent months, delivering driver updates that supercharged performance and squashed bugs at a torrid pace.

In a briefing with press, Intel Fellow Tom Petersen said a major focus during Battlemage’s development was improving software efficiency, so Intel’s drivers could better unleash the full power of its hardware. But that software still ran on first-gen hardware. Battlemage improves efficiency on the hardware front, using tricks like transforming the vector engines from two slices into a single structure, supporting native SIMD16 instructions, and beefing up the capabilities of the Xe core’s ray tracing units and XMX AI engines to, yes, make everything run smoother and better than before.

Battlemage’s hardware efficiency improvements are illustrated here, showing how a Fortnite frame runs on the new Arc B580 versus last-gen’s (more expensive) A750: the time to render a frame dropped from 19ms to a silky smooth 13ms.
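Frame times convert to frame rates by dividing 1,000 by the milliseconds per frame. Here’s a quick Python sketch of that arithmetic, assuming (for illustration only) that every frame took as long as the single frame on Intel’s slide, which real games never quite manage:

```python
def fps_from_frame_time(frame_ms: float) -> float:
    """Frames per second if every frame took `frame_ms` milliseconds."""
    return 1000.0 / frame_ms

# Fortnite frame times from Intel's slide; sustained fps in a real game will vary.
a750_ms, b580_ms = 19.0, 13.0
print(f"A750: {fps_from_frame_time(a750_ms):.1f} fps")  # 52.6 fps
print(f"B580: {fps_from_frame_time(b580_ms):.1f} fps")  # 76.9 fps
print(f"Frame time cut: {1 - b580_ms / a750_ms:.0%}")   # 32%
```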


I’ve included a bunch of technical slides above, so nerds can pick through the details. But here’s the upshot: The Arc B580 delivers 70 percent more performance per Xe core than last gen’s Arc A750, and 50 percent more performance per watt, per Intel.

Cue Keanu Reeves: Whoa. That’s absolutely bonkers. You almost never see performance leaps that substantial from a single-generation advance anymore!


That’s at an architectural level; the slide above shows the specific hardware configurations found in the Arc B580 and B570. A couple of things stand out here, first and foremost the memory configuration.

Nvidia and AMD’s current $300 gaming options come with just 8GB of VRAM, tied to a paltry 128-bit bus that all but forces you to play at 1080p resolution. The Arc B580 comes with an ample 12GB of fast GDDR6 memory over a wider 192-bit bus – so yes, this GPU is truly built for 1440p gaming, unlike its rivals. The Arc B570 cuts things down a bit to hit its $219 price tag but the same broad strokes apply.
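Why does bus width matter so much? Peak memory bandwidth is roughly the bus width in bytes multiplied by the per-pin data rate. The Python sketch below runs that math; the 19 Gbps and 17 Gbps GDDR6 data rates are assumptions plugged in for illustration, not figures from Intel’s announcement, so treat the outputs as ballpark numbers:

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bytes moved per transfer (bus bits / 8) x per-pin Gbps."""
    return bus_width_bits / 8 * data_rate_gbps

# ASSUMED per-pin GDDR6 data rates, for illustration only.
b580 = peak_bandwidth_gbs(192, 19.0)
rtx4060 = peak_bandwidth_gbs(128, 17.0)
print(f"192-bit @ 19 Gbps: {b580:.0f} GB/s")     # 456 GB/s
print(f"128-bit @ 17 Gbps: {rtx4060:.0f} GB/s")  # 272 GB/s
print(f"Bus width alone: {192 / 128:.1f}x")      # 1.5x
```

Even at identical memory speeds, the wider 192-bit bus moves 1.5x as much data per clock as a 128-bit one.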

Also worth noting: Intel’s new GPUs feature a bog standard 8-pin power connector (though third-party models may add a second one to support Battlemage’s ample overclocking chops). No fumbling with fugly 12VHPWR connectors here.

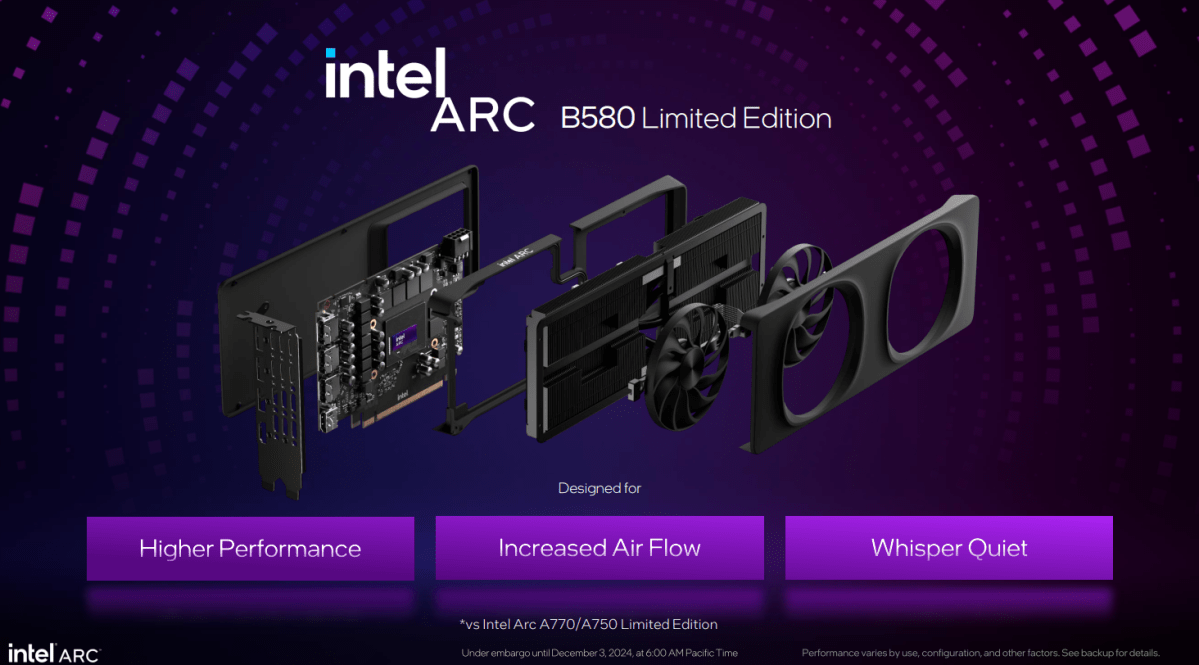

Intel’s homebrew Limited Edition reference GPUs will return for the B580 in a newer, smaller design with blow-through cooling. You’ll also be able to pick up third-party custom cards from the partners shown above, and the B570’s launch in January will be exclusive to custom boards, with no Limited Edition reference planned.


As part of the launch, Intel is also introducing a redesigned gaming app with advanced overclocking capabilities, including the ability to tweak voltage and frequency offsets.

Intel Arc B580 performance details

Now let’s dig into actual performance, using Intel’s supplied numbers.

Intel says the $249 Arc B580 plays games an average of 25 percent faster than last generation’s higher-tier $279 Arc A770 across a test suite of 40 games. Compared to the competition, Intel says the Arc B580 runs an average of 10 percent faster than Nvidia’s RTX 4060 – though crucially, those numbers were taken at 1440p resolution rather than the 1080p resolution the overly nerfed RTX 4060 works best at.
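Intel doesn’t say how it averaged results across its 40-game suite. A common approach for averaging speedups is the geometric mean of per-game ratios, which keeps one outlier title from skewing the result as much as a plain average would. Here’s a minimal Python sketch of that method (an assumption about methodology, not Intel’s confirmed approach), using made-up frame rates for three hypothetical games:

```python
from statistics import fmean, geometric_mean

def average_speedup(new_fps: list[float], old_fps: list[float]) -> tuple[float, float]:
    """Return (geometric mean, arithmetic mean) of per-game fps ratios new/old."""
    if len(new_fps) != len(old_fps) or not new_fps:
        raise ValueError("need matching, non-empty fps lists")
    ratios = [n / o for n, o in zip(new_fps, old_fps)]
    return geometric_mean(ratios), fmean(ratios)

# Hypothetical results for three games (NOT Intel's data).
new = [100.0, 72.0, 60.0]
old = [100.0, 50.0, 50.0]
geo, arith = average_speedup(new, old)
print(f"geometric mean: {geo:.2f}x")     # 1.20x
print(f"arithmetic mean: {arith:.2f}x")  # 1.21x
```

Here the 1.44x outlier pulls the arithmetic mean slightly above the geometric mean; across a 40-game suite with bigger swings, that gap can grow.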


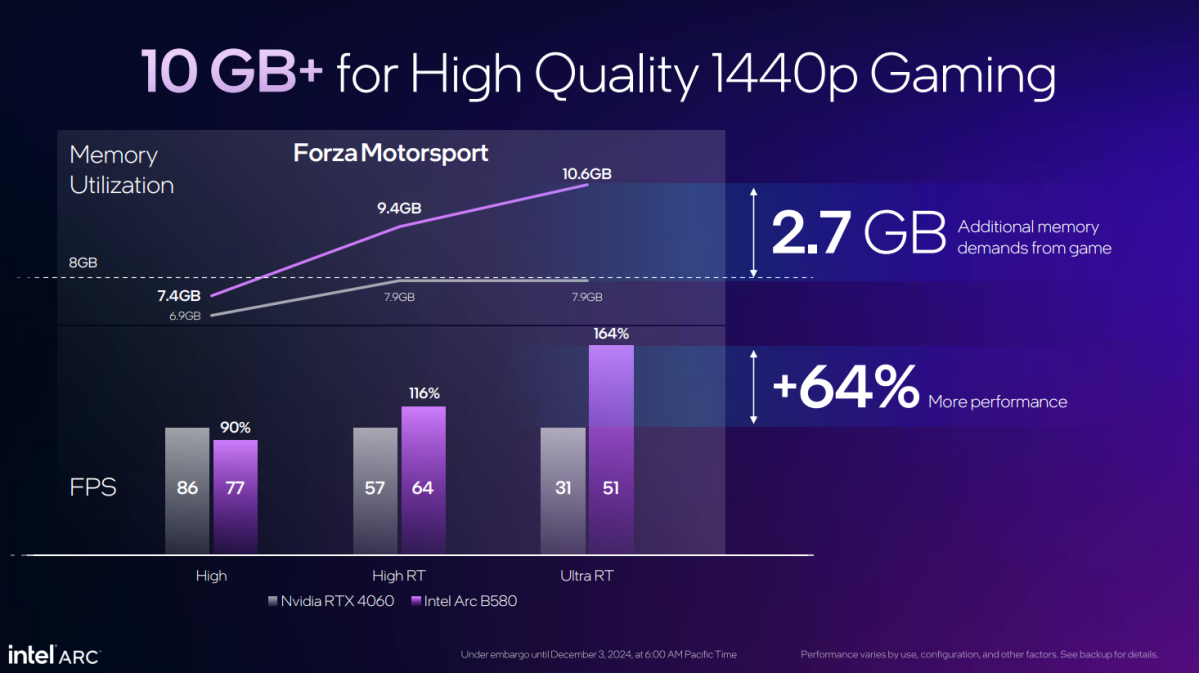

Intel also made a point of stressing how the RTX 4060’s limited 8GB of VRAM over a 128-bit bus can directly impact performance today. The slide above shows Forza Motorsport running at 1440p resolution. At standard High settings, the RTX 4060 actually holds a performance advantage. But as you scale up the stressors, flipping on ray tracing and moving to Ultra settings, the tables instantly turn, with the B580 taking a clear, substantial lead while the RTX 4060 hits the limits of what’s possible with its memory setup.

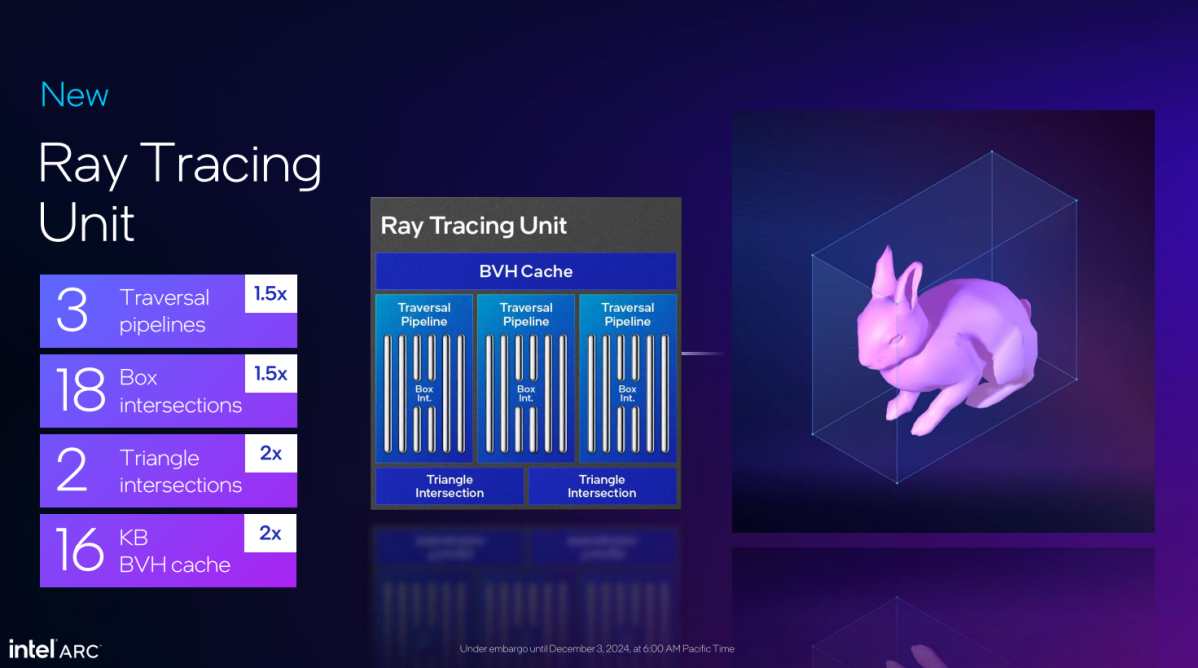

Speaking of, Intel says most of the key technologies underlying ray tracing have been improved by 1.5x to 2x in Battlemage compared to the first-gen Arc “Alchemist” offerings. Considering that Intel’s debut Arc cards already went toe-to-toe with Nvidia’s vaunted RTX 40-series ray tracing, there could be a fierce battle brewing in realistic real-time lighting next year – which isn’t something I thought I’d say about the $250 segment before even flipping the calendar to 2025. If you’re still rocking a GTX 1060 or 1650 from back in the day, the Arc B580 would be a massive upgrade in both speed and advanced features like ray tracing.

Raw hardware firepower alone is only part of the graphics equation these days, however. Nvidia’s RTX technology forced the power of AI upscaling and frame generation into consideration this decade – and Intel’s new software features are designed to supercharge frame rates and lower latency even further.

Meet XeSS 2 and Xe Low Latency

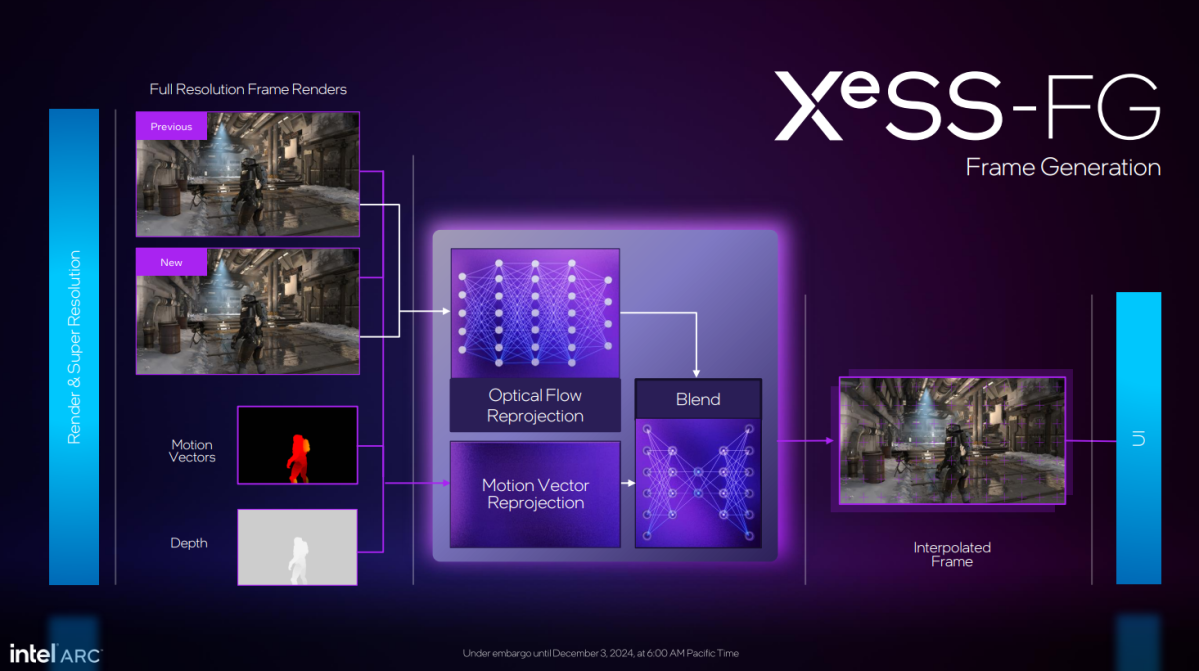

Intel’s XeSS technology debuted alongside the first-gen Arc cards, serving as an AI upscaling rival to Nvidia’s core DLSS technology. (These render frames at a lower resolution internally, then use AI to supersample the final result, leading to higher performance with little to no loss in visual quality.) But then Nvidia launched DLSS 3, a technology that injects AI-generated “interpolated” frames between every GPU-rendered frame, utterly turbocharging performance in many games and scenarios.
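To put rough numbers on “render at a lower resolution internally”: an upscaler’s scale factor divides each axis of the output resolution, so the pixel count (and, very roughly, the shading work) falls by the square of that factor. The 1.5x factor in this Python sketch is a hypothetical example, not a documented XeSS preset:

```python
def internal_resolution(out_w: int, out_h: int, scale: float) -> tuple[int, int]:
    """Internal render size when the upscaler divides each axis by `scale`."""
    return round(out_w / scale), round(out_h / scale)

# Hypothetical 1.5x per-axis factor at a 1440p output.
w, h = internal_resolution(2560, 1440, 1.5)
print(w, h)                                            # 1707 960
print(f"{(w * h) / (2560 * 1440):.0%} of the output pixels")  # 44% of the output pixels
```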

XeSS 2 is Intel’s response to that. While DLSS 3 requires the use of a hardware Optical Flow Accelerator only present in RTX 40-series GPUs, Intel’s XeSS 2 uses AI and Arc’s XMX engines to do the work instead – meaning it’ll also work on previous-gen Arc cards, and the Xe-based integrated graphics found in Intel’s Lunar Lake laptops.


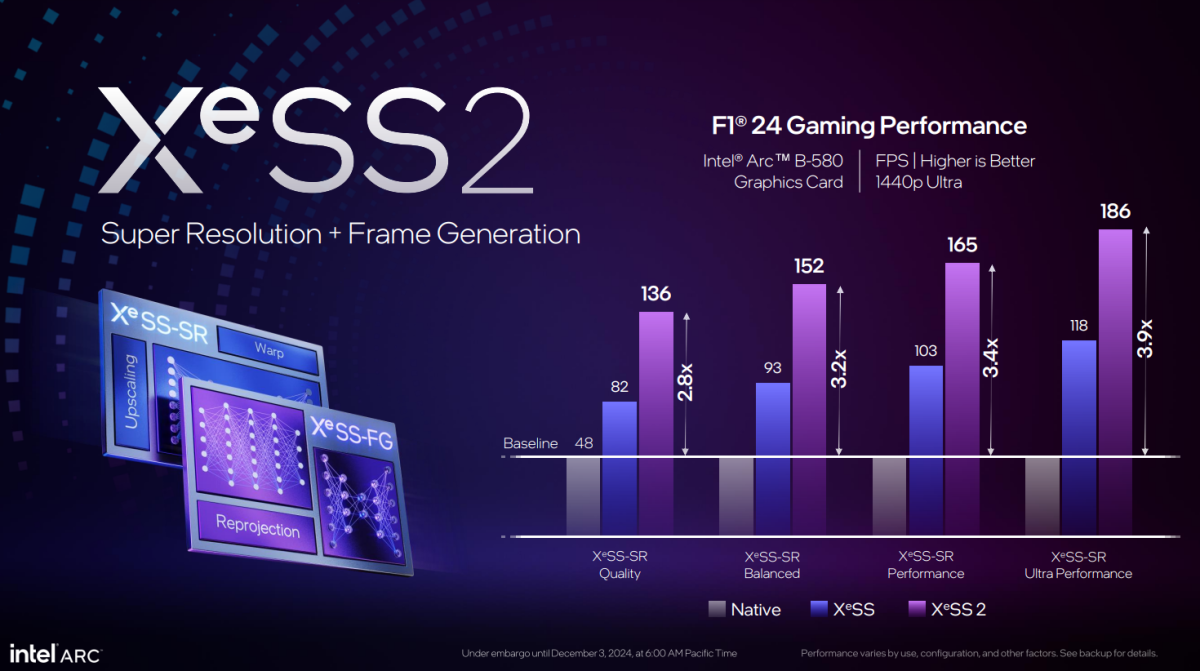

And as we see with DLSS 3, the performance improvements can be outstanding. Intel says that in its in-house F1 24 tests with the B580, activating XeSS 2 with supersampling and frame generation can improve performance by a whopping 2.8x to 3.9x, depending on the Quality setting used. While the game runs at 48fps at the chosen settings without XeSS 2 enabled, turning on XeSS 2’s Ultra quality lifts that all the way up to 186fps – a literal game changer.
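Intel’s F1 24 numbers also let us roughly split that uplift between the two techniques. The Python sketch below assumes frame generation inserts exactly one AI frame per rendered frame with zero overhead (a simplification), so whatever uplift remains is credited to supersampling:

```python
def split_uplift(native_fps: float, boosted_fps: float,
                 fg_factor: float = 2.0) -> tuple[float, float, float]:
    """Split a combined upscaling + frame-generation uplift.

    ASSUMPTION: frame generation multiplies presented frames by exactly
    `fg_factor` with no overhead; the remainder is credited to upscaling.
    Returns (total uplift, GPU-rendered fps, upscaling uplift).
    """
    total = boosted_fps / native_fps
    rendered_fps = boosted_fps / fg_factor
    return total, rendered_fps, rendered_fps / native_fps

total, rendered, upscale = split_uplift(48.0, 186.0)  # Intel's F1 24 figures
print(f"total uplift: {total:.1f}x")               # 3.9x
print(f"GPU-rendered frames: {rendered:.0f} fps")  # 93 fps
print(f"upscaling alone: {upscale:.2f}x")          # 1.94x
```

If frame generation carries any overhead, the supersampling share would actually be somewhat larger than this estimate.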


Support for XeSS 2 is coming to the games shown above, with more to arrive in the coming months. First-gen XeSS has landed in 150 games to date, so the hope is that XeSS 2 (which uses different APIs for developers to hook into) ramps up quickly as well.

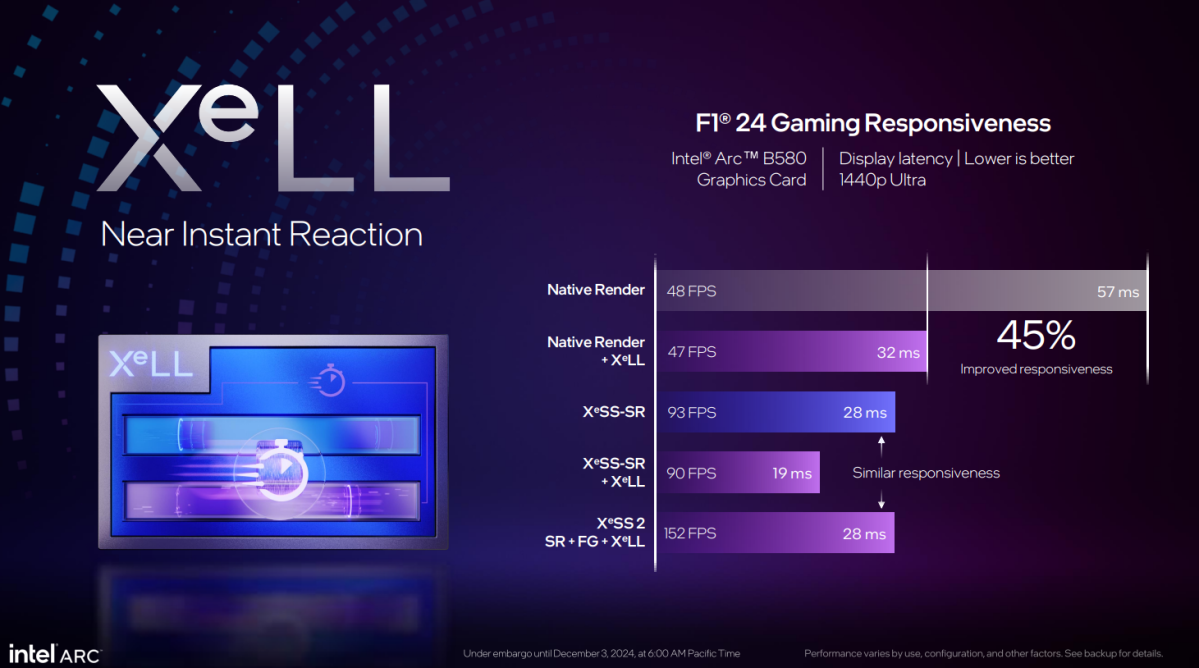

Injecting AI frames between traditionally rendered frames has a side effect, though: it increases latency, or the delay between your mouse click and the action occurring onscreen, because the interpolated AI frames can’t respond to your commands. Enter Intel’s Xe Low Latency feature.

XeLL essentially cuts out a bunch of the ‘middleman’ rendering and logic queues that happen behind the scenes in a frame, getting each frame to your screen much, much faster than typical. (Nvidia’s awesome Reflex technology works similarly.) Activating it drastically lowers latency. You can tangibly feel the improvement even in games without frame generation, and enabling it alongside XeSS 2 claws back the latency that frame generation adds.
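A toy model helps show why. In the Python sketch below, which is a simplification and not how Intel or Nvidia actually measure latency, each frame waiting in the render queue adds one frame time, and interpolation adds one more because the GPU must finish the next real frame before it can generate the in-between one. The two-frame queue depth and 60fps render rate are arbitrary assumptions:

```python
def toy_latency_ms(render_fps: float, queued_frames: int, frame_gen: bool) -> float:
    """Very rough input-to-display latency in ms (illustrative model only).

    Counts the frame being rendered plus any frames already waiting in the
    queue; interpolation adds one more frame time because the next real
    frame must be finished before the generated frame can be shown.
    Ignores game simulation, input polling, and display scan-out.
    """
    frame_ms = 1000.0 / render_fps
    latency = (queued_frames + 1) * frame_ms
    if frame_gen:
        latency += frame_ms
    return latency

for label, queue, fg in [
    ("default queue, no frame gen", 2, False),
    ("default queue, frame gen", 2, True),
    ("queue removed, frame gen", 0, True),
]:
    print(f"{label}: {toy_latency_ms(60.0, queue, fg):.0f} ms")
# default queue, no frame gen: 50 ms
# default queue, frame gen: 67 ms
# queue removed, frame gen: 33 ms
```

In this toy model, removing the queue more than offsets the extra frame that interpolation holds back, which mirrors the claim that XeLL claws back frame generation’s latency penalty.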

You can witness the improvements possible in the slide below, which shows the performance of an F1 24 frame with a variety of XeSS features (supersampling, frame gen, XeLL) active. It really illustrates the need for a latency-reduction feature alongside frame generation.


Latency reduction is so critical to frame gen “feeling right” that Intel requires developers to include XeLL as part of the wider XeSS 2 package, following in Nvidia’s footsteps. As with DLSS 3 and Reflex, you may see the options presented separately in some games, while others will silently enable them together – it’s up to the developer.

Battlemage brings the heat?

Always take vendor numbers with a big pinch of salt. We’ve seen vendor benchmark controversies over the years, including this year. Corporate marketing exists to sell stuff to you first and foremost. Hashtag: Wait for benchmarks et cetera et cetera.

All that said, while Battlemage doesn’t push for the bleeding edge of performance, I’m wildly excited by what I see on paper here. Budget GPUs have been an absolute quagmire ever since the pandemic, with none of the current Nvidia or AMD offerings being very compelling. They feel like rip-offs.


Intel’s Arc B580 and B570 feel like genuine value offerings, finally giving gamers without deep pockets an enticing 1440p option that’s actually affordable – something we haven’t seen this decade despite 1440p gaming becoming the new norm. Delivering better-than-4060 performance and 12GB of VRAM for $250 is downright killer if Intel hits all its promises, especially paired with what looks to be a substantial increase to Arc’s already-good ray tracing performance. And with XeSS 2 and XeLL, Intel is keeping pace with Nvidia’s advanced features – assuming developers embrace them as wholeheartedly as they’ve done with first-gen XeSS.

Add it all up and I’m excited for a truly mainstream GPU for the first time in a long time. The proof is in the pudding (again, wait for independent benchmarks!) but Intel seems to be brewing up something spicy indeed with Battlemage and the Arc B580.