Apple Intelligence, the AI inside the new Apple iPhone 16, takes a surprisingly practical approach. It’s straightforward, helpful, and, well, boring: a stark contrast to Microsoft’s Copilot+ PCs, which position themselves at the cusp of a revolutionary new era of computing, complete with a mandatory new keyboard button.

Apple, which touts itself as the foundation for creative work, could have used AI to let creatives generate “photos” of imaginary objects, as Google’s Pixel now does. It could have used AI, running either locally or in the cloud, to produce AI-generated art or AI-produced facsimiles of celebrity voices. It did none of that.

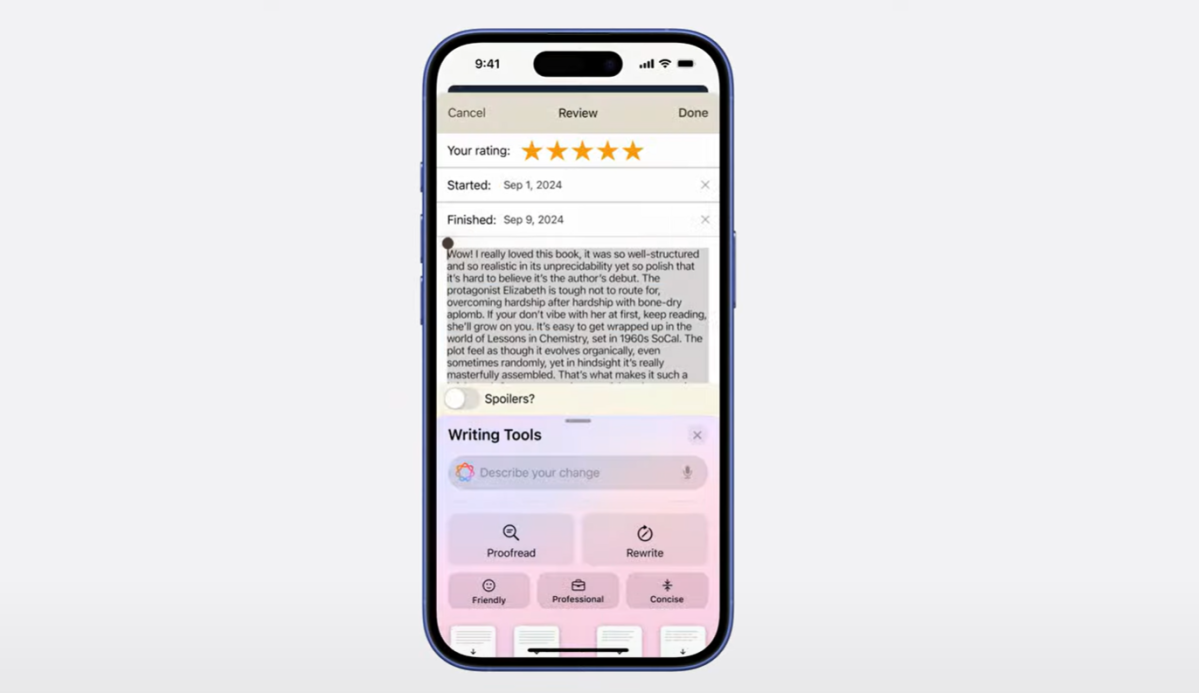

Instead, the Apple iPhone 16 uses AI as a productivity tool, first and foremost, with a focus on supercharging existing features with machine smarts.

Apple is revamping Siri with a new AI foundation to help it better understand what you yourself are looking for and to follow complex conversations. AI organizes your photos into albums of your favorite people. Visual intelligence will summon information about objects you’re looking at. Apple Intelligence will create summaries of your meetings. And in a very quick, blink-and-you’ll-miss-it feature, it even uses a “semantic index” to surface information you’re looking for and forgot about: Apple’s own version of the controversial Windows Recall feature.

YouTube / Apple

In fact, Microsoft and Apple seem to have swapped places. While Microsoft splashes generative AI art inside Photos and Paint, Apple presented the ability to rewrite an email as something special!

Apple took such a conservative approach to AI that it barely applied it at all to photo or video creation. Yes, you’ll see it in features like live previews of various camera filters, but the only feature I’d associate with traditional AI art was the ability to use generative AI to create your own “genmoji,” or custom emoji. Really, features like using a prompt to find specific photos and combine them into a “movie,” or searching your albums for a specific scene that you describe, feel like they have been in various operating systems and apps for years… because they have been!

Honestly, I kept waiting for more: Maybe the ability to capture the scene of a dancer, say, and then to use AI to remove the dancer and insert her into another video. Yes, Apple showed off the ability to highlight and then remove an object from a photo. But we’ve seen that before, too.

YouTube / Apple

It all felt very muted, and that’s probably not surprising. If Apple had pushed all its chips in on generative AI as a feature, rather than as a tool to enhance other features, it would have risked angering and alienating the cadre of creatives who traditionally turn to Apple and its iPhones. What Apple seemed to imply is that it doesn’t plan to use iOS or the iPhone to promote generative AI itself. Instead, its A18 silicon will be the foundation for AI that app developers can write to. If a developer wants to build AI into its app, and if a user wishes to buy and download that app, that’s fine: Apple’s hands are clean.

Craig Federighi, Apple’s senior vice president of software engineering, said that there are “multiple generative models on the iPhone in your pocket.” There most certainly are, doing all sorts of things. But Apple seems determined to let those features speak for themselves, without using “AI” in every other sentence.

Apple, then, is walking a fine line: AI as a productivity solution and a helpful tool that makes existing features more powerful is A-OK; AI as a creative aid feels much more controversial. Apple even downplayed Siri’s newfound AI powers. All that leaves an opening for rivals like Microsoft and Google to keep pushing their own AI capabilities. Remember, Apple leans heavily on preserving privacy. Apple appears to be betting that consumers are as distrustful of AI as they are of tech giants slurping up their data, and it is acting accordingly.